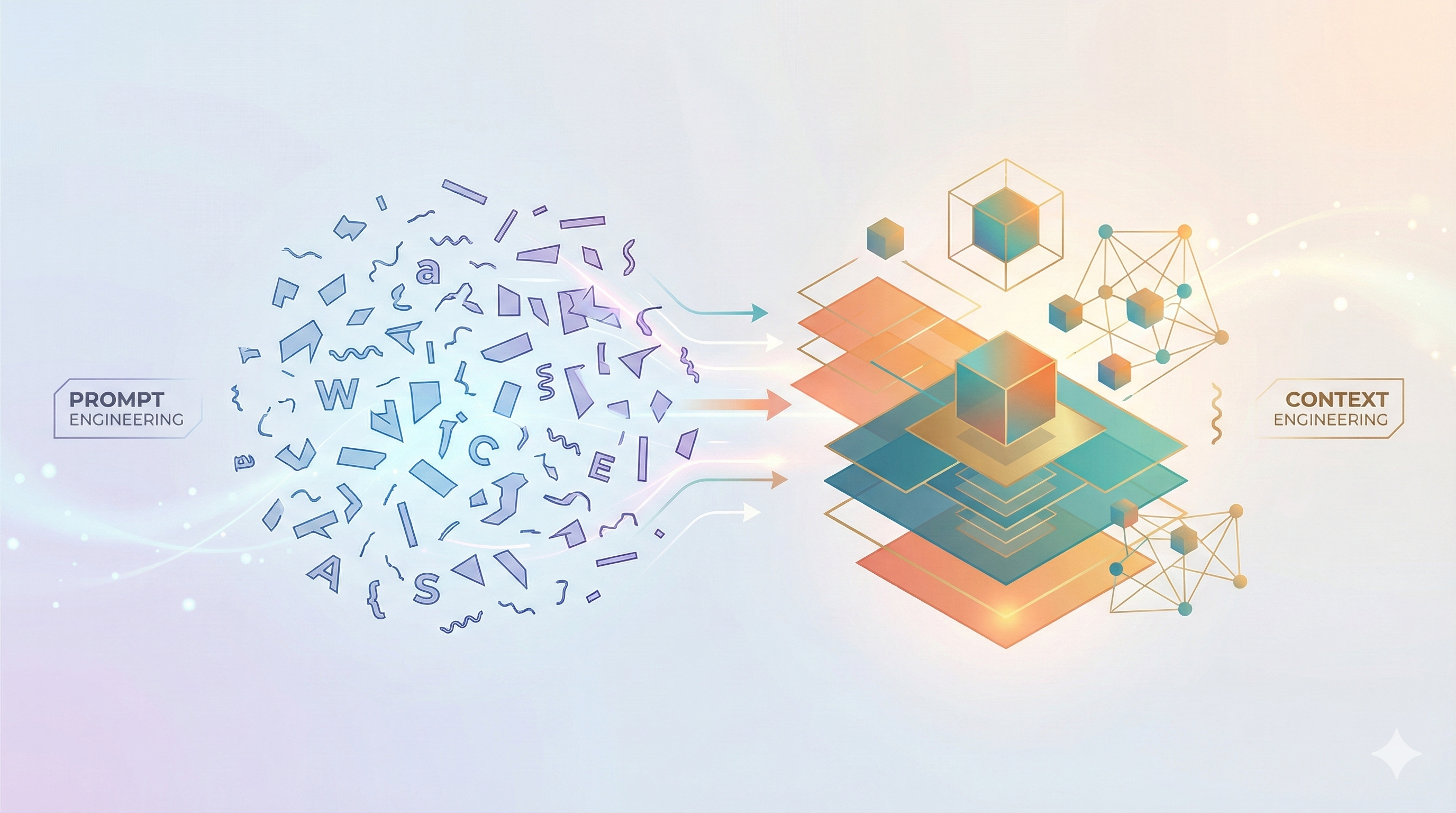

Prompt Engineering Is Dead.

Long Live Context Engineering.

We’ve all been there.

You rewrite the prompt. You add “think step by step.” You throw in a few examples and hope for the best.

Sometimes it works. Sometimes the model confidently hallucinates anyway.

At first, this feels random. Unpredictable. Almost magical.

But here’s the uncomfortable truth:

The problem isn’t how we prompt. The problem is what we think a prompt is.

Modern LLM systems don’t run on a single string of text anymore. They run on memory, tools, retrieved knowledge, system rules, and hidden state, all stitched together at inference time.

Prompt engineering was never designed to handle that.

Context engineering was.

Why Prompt Engineering Hit a Wall

Prompt engineering assumes something simple:

If you write the right words, the model will behave correctly.

This worked briefly when models were smaller and systems were simpler. But as LLMs scaled and moved into production, cracks started to show.

Prompt-based systems are:

- Static — no real memory or learning

- Brittle — small wording changes cause large behavior shifts

- Hard to debug — when things break, you don’t know why

- Poor at scale — longer prompts often make performance worse, not better

Worse, adding more context often increases hallucinations instead of reducing them.

That’s not bad luck.

That’s a sign the abstraction itself is breaking.

The Hidden Assumption Behind Prompts

Under the hood, prompt engineering treats an LLM like this:

$$P_\theta(Y \mid C) = \prod_{t=1}^{|Y|} P_\theta(y_t \mid y_{<t}, C)$$

Where:

- $C$ is “the prompt”

- $Y$ is the generated output

Everything important is assumed to live inside $C$.

This abstraction used to be “good enough.”

But it no longer matches reality.

What the Model Actually Sees

By the time a modern LLM generates its first token, its “prompt” already includes:

- System instructions

- User intent

- Retrieved documents

- Tool schemas

- Memory from past interactions

- Agent or environment state

- Safety rules

- Output constraints

The prompt is just the final serialization step.

What actually matters is how all of this information is assembled.

Formally, context now looks like this:

$$C = \mathcal{A}(c_1, c_2, \ldots, c_n)$$

Where:

- $c_1, \ldots, c_n$ are different context components

- $\mathcal{A}$ is a dynamic assembly function

This is the shift.

From writing text → to designing systems.

Context Is Not Text — It’s a Structured Object

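Each part of context plays a different role:

- $c_{\mathrm{instr}}$: system rules and policies

- $c_{\mathrm{query}}$: the user’s request

- $c_{\mathrm{know}}$: retrieved external knowledge

- $c_{\mathrm{mem}}$: persistent memory

- $c_{\mathrm{tools}}$: available actions

- $c_{\mathrm{state}}$: environment or agent state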

Prompt engineering flattens all of this into a single string.

Context engineering preserves structure until the last possible moment.

That single decision explains:

- why long prompts degrade performance

- why ordering suddenly matters

- why “more context” can reduce accuracy
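To make "preserving structure until the last possible moment" concrete, here is a minimal Python sketch. The `Context` class, its field names, the section labels, and the character budget are all illustrative assumptions, not an API from any library. The only point is that the components stay separate objects and become a string in one final `assemble` step.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Structured context; every field name here is a hypothetical choice."""
    instructions: str                                    # system rules and policies
    query: str                                           # the user's request
    knowledge: list[str] = field(default_factory=list)   # retrieved snippets
    memory: list[str] = field(default_factory=list)      # persistent memory
    tools: list[str] = field(default_factory=list)       # available actions
    state: str = ""                                      # environment or agent state


def assemble(ctx: Context, max_knowledge_chars: int = 200) -> str:
    """Serialize the structured context into one prompt string.

    Ordering and budgeting decisions live here, in one place,
    instead of being baked into a hand-written prompt.
    """
    # Keep retrieved snippets in order until the character budget is spent.
    kept, used = [], 0
    for snippet in ctx.knowledge:
        if used + len(snippet) > max_knowledge_chars:
            break
        kept.append(snippet)
        used += len(snippet)

    sections = [
        ("SYSTEM", ctx.instructions),
        ("TOOLS", "\n".join(ctx.tools)),
        ("MEMORY", "\n".join(ctx.memory)),
        ("STATE", ctx.state),
        ("KNOWLEDGE", "\n".join(kept)),
        ("USER", ctx.query),
    ]
    # Skip empty components so they add no noise to the final string.
    return "\n\n".join(f"[{name}]\n{body}" for name, body in sections if body)


ctx = Context(
    instructions="Answer using only the provided knowledge.",
    query="When was the invoice sent?",
    knowledge=["Invoice 17 was sent on 3 March.", "x" * 500],
)
prompt = assemble(ctx)
print(prompt)
```

Because ordering and truncation happen inside `assemble`, changing where retrieved knowledge sits or how much of it survives is a one-line change to the pipeline rather than a rewrite of the prompt.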

From Optimizing Words to Optimizing Pipelines

Once you see context as something assembled, not written, the problem changes.

You’re no longer optimizing text. You’re optimizing functions.

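At a high level, context engineering becomes:

$$\mathcal{F}^{*} = \arg\max_{\mathcal{F}} \; \mathbb{E}_{\tau \sim \mathcal{T}} \left[ \mathrm{Reward}\left( P_\theta\left(Y \mid C_{\mathcal{F}}(\tau)\right),\; Y^{*}_{\tau} \right) \right]$$

Where:

- $\mathcal{F}$ represents retrieval, selection, compression, and assembly

- $\mathcal{T}$ is the task distribution

Here $C_{\mathcal{F}}(\tau)$ is the context those functions build for task $\tau$, and $Y^{*}_{\tau}$ is the ideal output for that task.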

This is why prompt tuning feels random.

You’re tweaking one variable in a multi-stage system.
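One way to picture this: treat each stage as a plain function, compose the stages into a pipeline, and score whole pipelines over a sample of tasks. The sketch below does that with toy stand-ins. The word-overlap retriever, the `fake_model` function, the exact-match reward, and the two-task sample are all assumptions made for illustration; a real system would call an actual model and use a real evaluation set.

```python
from statistics import mean
from typing import Callable

DOCS = [
    "The capital of France is Paris.",
    "The capital of Japan is Tokyo.",
    "Bananas are rich in potassium.",
]
# Toy task sample: (question, expected answer).
TASKS = [("capital of France?", "Paris"), ("capital of Japan?", "Tokyo")]

Pipeline = Callable[[str], str]  # question -> assembled context


def tokens(text: str) -> set[str]:
    return {w.strip(".?").lower() for w in text.split()}


def retrieve_all(question: str) -> list[str]:
    # Baseline: return every document in storage order.
    return list(DOCS)


def retrieve_ranked(question: str) -> list[str]:
    # Rank documents by word overlap with the question (a crude relevance proxy).
    q = tokens(question)
    return sorted(DOCS, key=lambda d: len(q & tokens(d)), reverse=True)


def make_pipeline(retrieve: Callable[[str], list[str]], top_k: int) -> Pipeline:
    def run(question: str) -> str:
        selected = retrieve(question)[:top_k]   # selection
        return "\n".join(selected)              # assembly
    return run


def fake_model(context: str, question: str) -> str:
    # Stand-in for an LLM: it echoes the last word of the first context line,
    # mimicking a model that latches onto whatever appears first.
    first_line = context.splitlines()[0]
    return first_line.split()[-1].strip(".")


def score(pipeline: Pipeline) -> float:
    # Average exact-match reward over the task sample.
    return mean(
        1.0 if fake_model(pipeline(q), q) == expected else 0.0
        for q, expected in TASKS
    )


candidates = {
    "all_docs": make_pipeline(retrieve_all, top_k=3),
    "ranked_top1": make_pipeline(retrieve_ranked, top_k=1),
}
scores = {name: score(p) for name, p in candidates.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

In this toy setup the model and the question never change. Only the retrieval and selection stages differ, and that alone moves the score from 0.5 to 1.0.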

Retrieval Isn’t About Similarity, It’s About Usefulness

Most RAG systems optimize similarity scores.

Context engineering asks a sharper question:

How much does this context reduce uncertainty about the answer?

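Formally:

$$\mathrm{Retrieve}^{*} = \arg\max_{\mathrm{Retrieve}} \; I\left(Y^{*};\, c_{\mathrm{know}} \mid c_{\mathrm{query}}\right)$$

Where $I$ is mutual information, here between the ideal answer $Y^{*}$ and the retrieved knowledge $c_{\mathrm{know}}$, given the user’s query $c_{\mathrm{query}}$.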

This explains why:

- short, targeted snippets often beat long documents

- graph-based retrieval can outperform keyword search

- agentic RAG can beat static RAG

You’re not feeding the model more knowledge.

You’re feeding it less uncertainty.
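A toy calculation can make the uncertainty framing concrete. The sketch below computes, for a single fixed query, the mutual information between the answer and a retrieved snippet from a small joint probability table. Both tables are invented numbers for illustration, not measurements from any system.

```python
import math


def mutual_information(joint: dict[tuple[str, str], float]) -> float:
    """I(Y; K) in bits, from a joint distribution p(y, k) given as a dict."""
    p_y: dict[str, float] = {}
    p_k: dict[str, float] = {}
    # Marginalize the joint table to get p(y) and p(k).
    for (y, k), p in joint.items():
        p_y[y] = p_y.get(y, 0.0) + p
        p_k[k] = p_k.get(k, 0.0) + p
    return sum(
        p * math.log2(p / (p_y[y] * p_k[k]))
        for (y, k), p in joint.items()
        if p > 0
    )


# Snippet that pins down the answer: knowing it leaves no doubt about Y.
targeted = {
    ("yes", "snippet_says_yes"): 0.5,
    ("no", "snippet_says_no"): 0.5,
}

# Snippet that is on-topic but uninformative: Y is independent of it.
similar_but_useless = {
    ("yes", "intro_paragraph"): 0.25,
    ("no", "intro_paragraph"): 0.25,
    ("yes", "other_paragraph"): 0.25,
    ("no", "other_paragraph"): 0.25,
}

mi_targeted = mutual_information(targeted)
mi_useless = mutual_information(similar_but_useless)
print(mi_targeted, mi_useless)
```

The targeted snippet carries one full bit about a yes/no answer, while the on-topic but independent snippet carries none, even though a similarity score could rank both highly.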

Why This Shift Matters in Practice

Context engineering changes how systems are built and debugged.

Instead of asking:

- “What prompt works?”

You ask:

- “What context should exist at this moment?”

That enables:

- Better debugging — isolate failures to retrievers, memory, or tools

- Better scalability — modular pipelines instead of giant prompts

- Clear separation of concerns — retrieval, processing, and management evolve independently

This is why teams building modern systems spend more time debugging retrievers than rewording prompts.
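As a rough illustration of isolating a failure to one stage, the sketch below records what each stage produced, so a wrong answer can be traced to the stage that dropped the needed fact. The stage functions, the `Trace` structure, and the `first_stage_missing` helper are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Stage = Callable[[list[str]], list[str]]


@dataclass
class Trace:
    """Records the output of every stage for later inspection."""
    steps: list[tuple[str, list[str]]] = field(default_factory=list)


def run_pipeline(stages: list[tuple[str, Stage]], items: list[str], trace: Trace) -> list[str]:
    for name, stage in stages:
        items = stage(items)
        trace.steps.append((name, list(items)))  # snapshot this stage's output
    return items


def first_stage_missing(trace: Trace, needle: str) -> Optional[str]:
    """Return the first stage whose output no longer contains the needed fact."""
    for name, items in trace.steps:
        if not any(needle in item for item in items):
            return name
    return None


DOCS = [
    "Refund policy: refunds are issued within 14 days.",
    "Shipping takes 3 days.",
]

stages = [
    ("retrieve", lambda _: list(DOCS)),                  # fetch candidate documents
    ("select", lambda items: items[:1]),                 # keep the top document
    ("compress", lambda items: [s[:20] for s in items]), # naive truncation
]

trace = Trace()
final_context = run_pipeline(stages, [], trace)
culprit = first_stage_missing(trace, "14 days")
print(culprit)
```

Here retrieval and selection both keep the fact, and the naive truncation in `compress` removes it, so the fix belongs in the compression stage and not in the prompt wording.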

The Real Takeaway

Prompt engineering isn’t useless.

It’s just incomplete.

Prompt engineering is a subset of context engineering, not a rival to it.

Context engineering is what happens when we stop pretending LLMs live in a text box and start designing the machinery that feeds their intelligence.

Once you see that shift, there’s no going back.